Fine-tuning adapts a pre-trained large language model (LLM) to a specific application, and it is often the key to good performance there. Here’s a breakdown of why it matters:

1. Adaptation to Specific Tasks

- Importance: Fine-tuning allows pre-trained models to be adapted for specific tasks or domains, which is essential for improving their accuracy and relevance. General-purpose models may not perform well on specialized tasks, leading to inaccurate or misleading outputs.

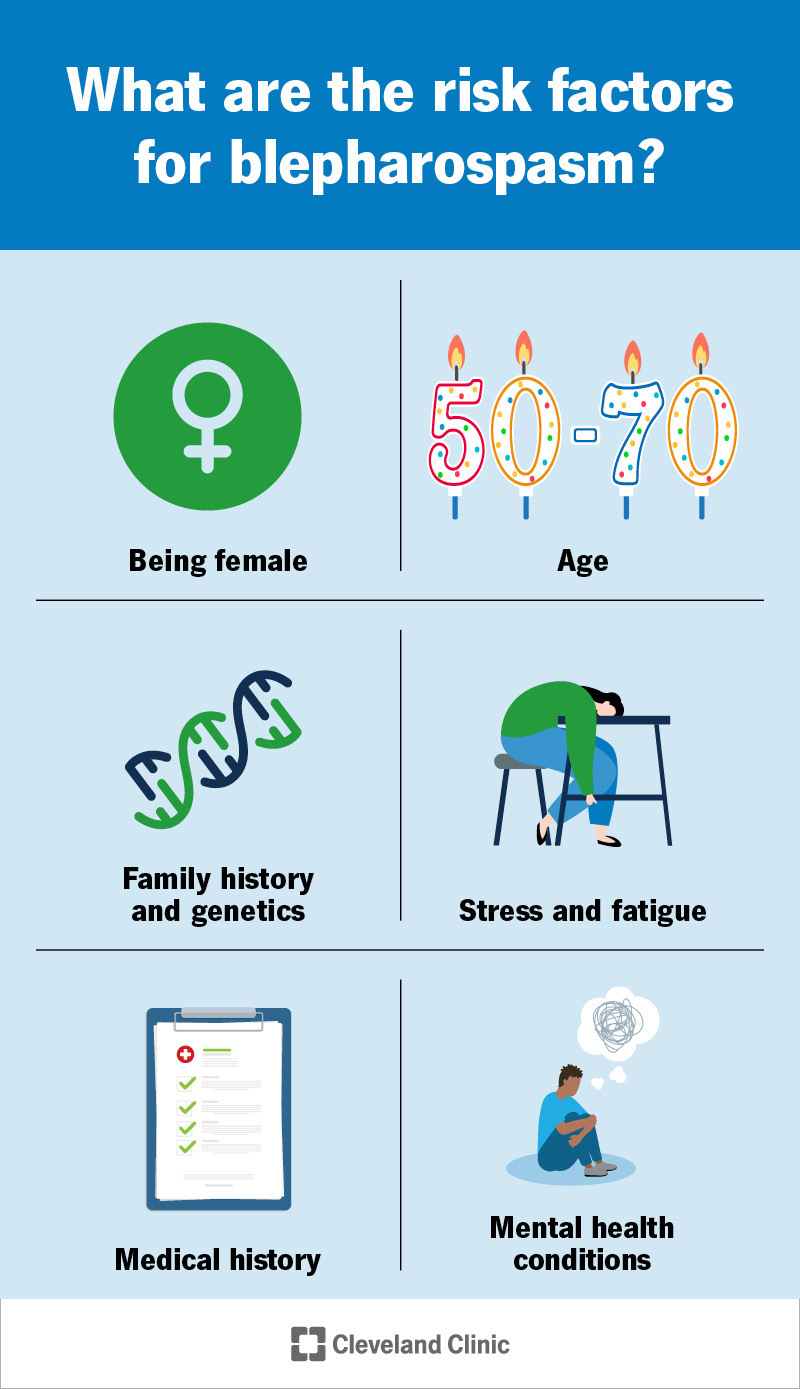

- Example: For complex tasks like legal or medical text analysis, incorporating domain-specific knowledge through fine-tuning is often necessary.

2. Improving Quality and Reliability

- Importance: Fine-tuning can improve the quality and reliability of model outputs. In particular, it helps reduce hallucinations (plausible-sounding but untrue statements), which harm credibility.

- How: By fine-tuning with accurate and representative data, models can produce more reliable outputs tailored to the specific context.

3. Handling Data Limitations

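- Importance: An LLM's prompt is bounded by its context window, so only a limited number of examples or reference documents can be supplied at inference time, and the model may produce less relevant outputs as a result. Fine-tuning lets the model learn from far more examples than fit in a single prompt.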

- Best Practices: Using various data formats and ensuring a large, high-quality dataset can improve performance during fine-tuning.
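
As a rough illustration of the data preparation described above, the sketch below cleans a handful of raw examples and writes them out in two different training formats. The field names (`question`, `answer`, `prompt`, `completion`, `messages`) and the sample records are assumptions made for this example; the format a given fine-tuning toolkit expects may differ.

```python
import json

# Hypothetical raw examples; the field names "question" and "answer" are assumptions.
RAW_EXAMPLES = [
    {"question": "What is the notice period in clause 4?", "answer": "30 days."},
    {"question": "What is the notice period in clause 4?", "answer": "30 days."},  # duplicate
    {"question": "Who is the lessor?", "answer": ""},  # empty answer, dropped below
    {"question": "Which law governs the contract?", "answer": "The law of England and Wales."},
]


def clean(examples):
    """Drop records with empty fields and exact duplicates, preserving order."""
    seen = set()
    kept = []
    for ex in examples:
        q, a = ex["question"].strip(), ex["answer"].strip()
        if not q or not a:
            continue
        if (q, a) in seen:
            continue
        seen.add((q, a))
        kept.append({"question": q, "answer": a})
    return kept


def to_prompt_completion(ex):
    """One possible format: a flat prompt/completion pair."""
    return {"prompt": f"Question: {ex['question']}\nAnswer:", "completion": " " + ex["answer"]}


def to_chat(ex):
    """Another possible format: a list of role-tagged messages."""
    return {"messages": [
        {"role": "user", "content": ex["question"]},
        {"role": "assistant", "content": ex["answer"]},
    ]}


def to_jsonl(records):
    """Serialize records as JSON Lines (one JSON object per line)."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


cleaned = clean(RAW_EXAMPLES)
flat_jsonl = to_jsonl([to_prompt_completion(ex) for ex in cleaned])
chat_jsonl = to_jsonl([to_chat(ex) for ex in cleaned])
print(flat_jsonl)
```

Keeping cleaning separate from formatting makes it easy to try several formats on the same cleaned data and compare the resulting models.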

4. Task Complexity and Specificity

- Importance: Fine-tuning is particularly beneficial for narrow, well-defined tasks. The complexity of the task often dictates the extent of improvement from fine-tuning.

- Considerations: Easier tasks tend to show larger improvements, especially when using parameter-efficient methods like LoRA (Low-Rank Adaptation).
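
To make "parameter-efficient" concrete, the sketch below compares the number of trainable parameters for one weight matrix under full fine-tuning and under LoRA. LoRA freezes the original `d_out × d_in` matrix and trains two low-rank factors of shapes `d_out × r` and `r × d_in`. The layer size (4096 × 4096) and rank (8) are illustrative assumptions, not values from the text above.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for the two LoRA factors B (d_out x r) and A (r x d_in)."""
    return rank * (d_out + d_in)


# Illustrative sizes only (assumed, not taken from any specific model).
d_out, d_in, rank = 4096, 4096, 8

full = full_finetune_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)

print(f"full fine-tuning: {full:,} trainable parameters")  # 16,777,216
print(f"LoRA (r={rank}):     {lora:,} trainable parameters")  # 65,536
print(f"LoRA / full:      {lora / full:.2%}")               # 0.39%
```

The saving grows with layer size, because the LoRA count scales with `d_out + d_in` while the full count scales with `d_out * d_in`.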

5. Resource Considerations

- Importance: While fine-tuning can lead to performance improvements, it is also resource-intensive. It requires computational power, time for data collection and cleaning, and expertise in machine learning.

- Evaluation: Fine-tuned models need careful evaluation, because measured results can be sensitive to how prompts are worded. Serving and maintaining a fine-tuned model also requires ongoing infrastructure support.
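
One way to check prompt sensitivity during evaluation is to score the same model on the same labeled set under several prompt wordings and look at the spread. The sketch below assumes a hypothetical `toy_model` stand-in, which is deliberately brittle so that the effect is visible, plus an invented four-item evaluation set. In practice the stand-in would be replaced by a call to the fine-tuned model.

```python
from statistics import mean

# Hypothetical labeled evaluation set (invented for this sketch).
EVAL_SET = [
    ("The service was excellent.", "positive"),
    ("I want a refund.", "negative"),
    ("Great value for the price.", "positive"),
    ("The app keeps crashing.", "negative"),
]

# Prompt variants that ask for the same thing in different words.
TEMPLATES = [
    "Classify the sentiment: {text}",
    "Is this review positive or negative? {text}",
    "Sentiment of the following text ({text}):",
]


def toy_model(prompt: str) -> str:
    """Stand-in for a fine-tuned model; replace with a real inference call.

    It is deliberately brittle so that accuracy varies with the template.
    """
    lowered = prompt.lower()
    if "excellent" in lowered or "great" in lowered:
        return "positive"
    # Brittle behavior: the instruction's own wording leaks into the answer.
    if "positive or negative" in lowered:
        return "positive"
    return "negative"


def accuracy(model, template, dataset):
    """Fraction of examples the model labels correctly under one template."""
    correct = sum(model(template.format(text=text)) == label for text, label in dataset)
    return correct / len(dataset)


scores = {t: accuracy(toy_model, t, EVAL_SET) for t in TEMPLATES}
spread = max(scores.values()) - min(scores.values())

for template, score in scores.items():
    print(f"{score:.2f}  {template}")
print(f"mean={mean(scores.values()):.2f}  spread={spread:.2f}")
```

A large spread suggests that a single-template score is not a reliable summary, so reporting the mean together with the range across templates gives a fairer picture.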

6. Real-World Applications

- Importance: Fine-tuned models are widely used in various applications, including sentiment analysis, named entity recognition, and language translation. They enable organizations to leverage LLMs for specific business needs effectively.

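- Examples: Domain-specialized models such as BloombergGPT, built for financial tasks including sentiment analysis, demonstrate the practical benefits of adapting a model to a domain. Note that BloombergGPT was trained on a mix of financial and general-purpose data rather than fine-tuned from an existing model, so it illustrates domain specialization more broadly.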

7. Best Practices for Fine-Tuning

- Recommendations:

  - Start with a pre-trained model and focus on data relevant to the target task.

  - Experiment with different hyperparameters and data formats.

  - Use techniques like multi-task fine-tuning and parameter-efficient fine-tuning to optimize performance while managing resource constraints.
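
The hyperparameter experimentation recommended above can be organized as a simple grid search. In this sketch, `train_and_evaluate` is a hypothetical placeholder whose synthetic formula exists only to make the example runnable. A real version would launch a fine-tuning run and return a validation metric. The search-space values are likewise assumptions.

```python
import itertools

# Assumed search space; sensible values depend on the model and dataset.
SEARCH_SPACE = {
    "learning_rate": [1e-5, 5e-5, 1e-4],
    "lora_rank": [4, 8],
    "epochs": [1, 3],
}


def train_and_evaluate(config: dict) -> float:
    """Placeholder for a real fine-tuning run that returns a validation score.

    The formula is synthetic and carries no empirical meaning.
    """
    penalty = abs(config["learning_rate"] - 5e-5) * 1e4
    return 0.01 * config["lora_rank"] + 0.02 * config["epochs"] - penalty


def grid(space: dict):
    """Yield every combination of hyperparameter values as a dict."""
    keys = list(space)
    for values in itertools.product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


results = [(train_and_evaluate(cfg), cfg) for cfg in grid(SEARCH_SPACE)]
best_score, best_config = max(results, key=lambda item: item[0])

print(f"tried {len(results)} configurations")
print(f"best config: {best_config}")
```

Because each real run is expensive, a small grid like this (or random sampling from a larger space) is usually combined with a parameter-efficient method to keep the total cost manageable.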

Conclusion

Fine-tuning is essential for maximizing the potential of LLMs in specific applications. It enhances model performance, reliability, and adaptability, making it a critical step in deploying LLMs effectively. However, the decision to fine-tune should be based on careful evaluation of task complexity, data availability, and resource constraints.